Sometimes, I notice myself living the same problems that I describe.

As I wrote this I became very aware that the first section was about unglamorous, long-term work (The Challenge of Adoption) whilst the next two sections were fun, surprising and attention-grabbing (Automated Applications & Strategic Strings to Manipulate LLMs).

Which of these should I lead with? If you only read one thing, then which matters more?

I’ve tried to practise what I preach, and gone for the less flashy, more important idea up top. If you’ve got time, though, I really recommend reading all three! You can message me to let me know which way you’d have gone and why.

Until then, happy reading

James

If You Only Read One Thing

The challenge of adoption is not new. Digital transformation initiatives have been underway for years, and if we forget their lessons when it comes to AI we do so at our peril.

This month I asked an IfG panel entitled “How should government use AI” which previous technology-enabled shifts in ways of working they thought were good models for the current one. For me, questions like this are important for helping us progress beyond the current moment of uncertainty. It's easy to identify the negative consequences of any given action (or inaction), but there are, as yet, few frameworks for deciding which of these is the right course.

This month I also saw lots of excellent discussion about how we do organisational change, not simply how we do technology. As I’ve said before, if this initiative is owned by IT departments or CTOs it is likely to have a very different flavour than when it is owned by the organisation as a whole. The technology is novel, but the challenges of technology-caused organisational change and job obsolescence have a similar feel to those of past transformation waves, and so we should learn from them.

Pivotl hosted an excellent event to launch Alan Brown’s new book, which looks at how organisations can move beyond experiments to AI at scale. Link.

James Plunkett lamented the continued underplay of the challenge of adoption of technology, particularly in government where digital transformation is still very much incomplete. Link.

Reform (the think tank, not the party) argued for change management as a focus, with transformation funded explicitly, rather than squeezed to protect frontline services. Proofs of concept, they said, are cheap financially and politically. Stopping other programmes to scale pilots up is not. Link.

Steve Newman was more optimistic, hoping that AI’s arrival might be the wake-up call needed to fix systems that already weren’t working. Link.

For me, these discussions are best summed up by two key principles. Firstly, technology-caused disruption is nothing new, so let us learn from previous experience where possible. Secondly, current AI systems are not an end point: there is more tech-caused change to come.

Your organisation needs to be flexible, adaptive and able to respond. If you’d like some help on this journey, well, that’s what we were set up to do.

Contents

If You Only Read One Thing

What Is GenAI Good For?

Job application arms races

SEO for LLMs

Replacing Software Engineers

Timelines of improvement

How To Successfully Integrate GenAI With Existing Organisations

Task selection: avoid brittleness

What’s on the to do list

Good Work In Government - Education

Our Recent Work

How to use technology in the recruitment process

Why the right people and skills are vital for successful AI adoption

Zooming Out

Professional services, lobbying and legal barriers to entry

Robotics

Learning More

Newsletters

Case Studies: CV Screening

Manufacturing, Supply Chain & Logistics

Technical Lessons Learned

The Lighter Side

What Is GenAI Good For?

Job application arms races. At the institutional level: OpenAI has partnered with Indeed to personalise invitations to apply. WSJ has a long read on the challenge of filtering AI-written resumes. On the ground: a viral Reddit post (itself written and amplified by AI) claimed to have got 50 interviews from 1000 automated applications in just 24 hours. Meanwhile, a viral job advert offered to hire 200 software engineers this week (but was actually clever content marketing for a platform which uses AI to conduct first-round interviews). It looks like we are heading full steam ahead towards “My AI-recruiter reads your AI-written application”, which would be something of a hellscape. I saw two models this month for how we’ll escape it. One is friction, in the form of pay-to-apply (which changes the dynamics for spamming). The other is the emergence of walled gardens, where instead you only hire from vetted private members’ clubs - and they say there are no new ideas under the sun…! OpenAI-Indeed. WSJ. Reddit and Friction. Viral Job Ads. Walled Gardens.

SEO for LLMs is coming. If those acronyms mean nothing to you then I slightly envy you. SEO, or Search Engine Optimisation, is the deliberate effort that organisations go through to rank higher in Google search results. It’s somewhere between a dark art and a heavily funded technological war, and the outputs of AI chatbots are the new battlefield. Put simply, if someone asks ChatGPT for a recommended supplier, is it going to suggest you? How do you increase the odds that it will? A paper this month found that by including strategic (but incomprehensible to humans) text sequences on web pages, which were subsequently harvested to train AI models, you could manipulate the answers these chatbots gave. A NYT journalist, trying to rehabilitate his “according to LLM” public perception (the story is wild, well worth reading), found a network of consultancies who are starting to offer this as a paid service. Edelman (one of the biggest PR firms globally) released an AI Landscape for Communicators report this month, but strangely this practice didn’t get a mention. Deliberate? Paper. One Journalist's Wild Account. Edelman.

Even more than it was when you started reading this email. The rate of capability improvements of the underlying models is astonishing and the developers expect more to come. Video: performance over time. Why people continue to expect progress. The Gospel according to Sam.

Doing work that otherwise would have required software engineers. Often this isn’t writing code, but rather knitting together the legions of SaaS tools that proliferate across organisations. I saw a hugely impressive demo from Replit this month, allowing you to use a simple chat interface to integrate several platforms for automated outbound marketing (expect your work email inbox to flood). Klarna have gone further and announced they’re rebuilding this capability in-house, so as to cut Salesforce and Workday altogether. A joint paper between Microsoft and several Ivy League schools found a 26% increase in developer productivity. But two notes of caution, which we have discussed before:

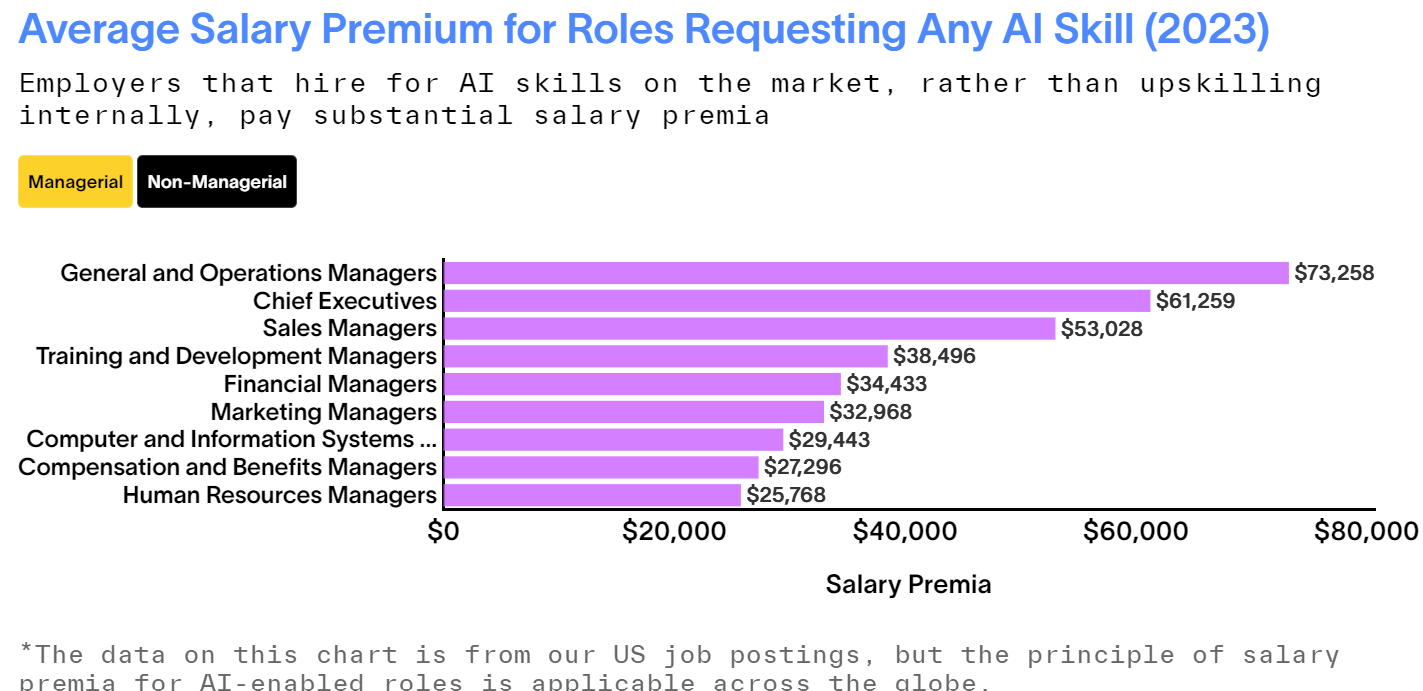

The salary premium for people with the skills to get up and running with AI tools is high (see the chart).

Unless you’re confident you’ve got them in house, you probably don’t. As a friend building an LLM-powered startup told me this month: “Most organisations think their workforce is Deloitte, when in reality it looks more like Tesco”.

Scope your ambitions accordingly.

Replit demo - 1hr workshop video available on request! Klarna announcement. Developer productivity. Chart.

How To Successfully Integrate GenAI With Existing Organisations

Choose tasks where being mostly right matters more than occasionally being wrong. How do you choose use cases for AI tools when they keep getting things wrong in unpredictable ways? With a resilience mindset. Prioritise tasks with low brittleness, where there is tolerance for things going off course without something snapping. Rather than starting with automated decision making or customer response, we encourage teams to first experiment with advisory or prioritisation functions like:

From the data you have access to give me a shortlist of reasons why sales are down so that I can investigate further; or,

Give me a summary of what themes my target customers are discussing

For these tasks, if 90% of answers are valuable, you’ve come a long way - so long as you can quickly rule out the other 10%. For “brittle” tasks, you have to spend masses of time trying to catch the remaining 10%, something even the tech giants are struggling with. Prioritisation and advisory tasks can still have a big bottom-line impact, supporting frontline teams to go from transactional support to concierge-like advisors. It’s one of the reasons the most creative industry leaders aren’t talking about automation, but rather LLMs being used to make people feel special as a service!

Automation will shift how far down our “to-do list” each of us gets - but not always in the same way. What is and isn’t possible at work is often a question of resources, not a fundamental limit. I find this a helpful way of thinking about how the work of an organisation shifts as employees adopt new tools (see If You Only Read One Thing):

Some people will have time freed up, and will get to the next value-add activity that previously got crowded out. This next task might be a long-term value add (like learning a new skill) or less valuable, hour for hour, than what they were doing before.

These new activities will generate work for other people in the organisation. If I’ve suddenly got time to research and implement a marketing strategy in a new sector, that will create more work for teams that do onboarding or customer support.

Some things were too painful to do before - maybe reading research reports or conducting scenario planning exercises - but are made more feasible if you can instead read summaries or automate the legwork. We should expect to see demand for these inputs go up and they might come from outside the company, but they might also come from within.

Lastly, learning how best to use AI tools is itself a task on your team’s to-do lists. You might want to drive adoption through the organisation, but first you’ll need to check whether teams have time to make the transition and, if you free up a few hours for them to learn, whether they’ll use the time for something more urgent instead!

Software creating labour demand. Implicit assumptions and time constraints.

Joined-up thinking across the UK Department for Education. An excellent example of balancing technical feasibility studies with learning about attitudes from teachers, students, parents and potential suppliers. They’ve also just announced the creation of a Data Store that will give suppliers a better route to creating useful tools - and, because those tools will be trained on the same data, it will be easier for schools to compare offerings! Research. Announcement.

Our Recent Work

How to use technology in the recruitment process. Link.

Why the right people and skills are vital for successful AI adoption. Link.

Zooming Out

“AI can’t possibly do what we do”, many professional services cry. But is that a statement of empirical fact? One of legal realism? Or an emotional reaction to a future too troubling to imagine? Defences to automation are rarely more effective than government regulation and - for my doctor and barrister friends who sometimes read this - professional standards and a closed shop are more of a continuous spectrum than a categorical distinction. No wonder, perhaps, that lobbying on AI has taken off, and not just from tech companies. Ben Evans on Efficiency. Lobbying Chart (below).

Robotics improvements will be required for any of the AI maximalist claims to come true. Improvements are somewhere between steady and remarkable, spurred by LLMs helping to make instructions comprehensible to robots. As the LLM said to the robot who was trying to learn to catch, don’t take your eye off the ball. Video.

Learning More

Excellent newsletters. It’s been a while since I updated the list of newsletters I recommend, though anyone who clicks through the links will recognise these names already. If you don’t know them already, check out:

Implications by Scott Belsky (Chief of Strategy at Adobe), which, as it says, looks at the consequences of AI, not the news.

Brainfood by Hung Lee is for the recruitment industry, but an amazing weekly source of provocation on Future of Work and shifting workplace impacts of AI (and more!)

A longer list of recommendations from Armand Ruiz, VP of Product for IBM’s AI Platform. Whilst I don’t follow all of these, there’s plenty of overlap.

Case Studies: AI and CV screening, when it goes wrong. Link.

Manufacturing, Supply Chain and Logistics. How is AI being put to work in supply chains, particularly where there are high regulatory requirements (like pharmaceuticals, or foods)? A great podcast overview. The quick answer: mostly classical AI for scenario planning, with GenAI as an interface layer. Podcast.

Two sets of lessons from a year of building with LLMs:

Technical: Long form guide. Top tips summary.

Getting the team on board: Atlassian.

The Lighter Side

In a world of AI-generated fakes, can you tell the difference between real and fake AI companies? Link

Wow. Link

You might be using AI behind the scenes, but don’t worry it’ll never give you up, never let you down… Link