AI Digest - June 24

What matters to organisational leaders each month

If you only read one thing

Automation removes two key stabilisers: social norms and positive friction. The likely consequence is lower trust.

We are at the start of a new wave of automation. We are about to introduce software that can efficiently emulate a person, but without a person’s time constraints or social qualms.

Humans behave differently when they feel reduced responsibility for their actions. It’s why people are more likely to act out in online forums, or behind the wheel of a car. A similar dynamic applies when people act through representatives: they will tolerate outwardly harmful actions taken on their behalf that they wouldn’t countenance doing themselves. By contrast, in-person interactions require people to face judgement for their choices. Even where someone might gain an advantage by breaking the rules, social pressure is often enough to keep them in check.

A second major stabilising force is friction. There are plentiful ways for consumers to get something for free, from leaving without paying to abusing right-to-return policies. In most circumstances, for most people, the time and effort involved is high enough that they won’t bother, even if they don’t expect to get caught. This is what allows companies to make generous, brand-building offers: “Didn’t enjoy this product? Simply bring back the empty packet for a no-questions-asked refund”. Goodwill grows from such trust, but trust is gradually earned and quickly broken. Once an equilibrium switches to a low-trust mode, there’s no coming back.

These forces allow many social processes to flourish. There are few barriers to who can submit a job application or a Freedom of Information request; customer complaints are handled in good faith; lawsuits are typically reserved for high-value disputes, whilst smaller cases are handled less formally. Digitally outsourced transactions, in contrast, replace trusted relationships with rules-based ones.

Digital automation undermines many longstanding processes, often invisibly until a system is overwhelmed. Banking relies on building trusted relationships with depositors, many of whom still visit branches. Going online-only promised significant cost savings, but at a hidden cost. As Silicon Valley Bank found last year, a business model based on trust breaks down if your customers are able and willing to leave you from the comfort of their phone screens. Bank runs have happened before, but the speed of SVB’s collapse was unprecedented, with nearly 80% of deposits withdrawn in just 2 days. When interactions are less human, what matters isn’t brand loyalty but switching cost.

Dozens of other processes, where social norms and friction once provided a platform for good-faith interactions, are now under threat. Recently we have seen:

Someone in Australia filing over 20,000 noise complaints in a single year. Link.

Scammers using ChatGPT to enrol fake students in distance learning courses in order to claim student financial support. Link.

Online advertising being upended as AI-generated content floods search engines. Link.

Walkthrough guides circulating for automating job applications. Link.

And so, some questions for organisational leaders to ponder over the next few months:

Where in your business is the equilibrium only stable because of friction and social norms?

What mechanisms are in place to monitor and stop abuse, whether human or automated?

How will you make things harder for adversarial users without making them meaningfully worse for those you exist to serve?

Who in your organisation is in charge of watching for this behaviour change?

The Big Rule Adjustment. Security vs Usability. Declining Tolerance for Friction. SVB Users Flee. Finally, my local coffee shop, which had to turn off its digital loyalty programme when people started tapping 10 times.

Contents

If You Only Read One Thing

The Big Rule Change

What Is GenAI Good For?

Increasing The Reach Of Decision Makers

Doing The Job When You Don’t Want Flair

Adoption, Mitigation & Best Practice Survey

How To Successfully Integrate GenAI With Existing Organisations

Prototypes And Breaking Silos

Building An Approvals Process

Gender Gaps In Workplace Adoption

Zooming Out

Consumer Adoption - Apple Makes Its Move

Agents

Cybersecurity

Learning More

Training Courses: Doing Actual Things In A Business With AI

Half Year Roundup

The Lighter Side

What Is GenAI Good For?

Decision makers can reach for massively more context. Working with structured data is comparatively simple, so much so that “show me the data” has become a Business School maxim. The most efficient organisations of the modern economy have developed ways of analysing almost anything that sits within a database, bringing maximal decision-making power to a relatively small executive group. But this data makes up a staggeringly small proportion of the information within an organisation (only about 10% by storage size, by some estimates). Emails and contracts, PowerPoint presentations and customer call transcripts were all largely inscrutable. If you wanted to understand an area like this, you had to ask an expert for their subjective impression. This is poised to change. Decision makers with expertise in one area can now access and query information from other domains on demand, without the friction of finding someone who works there, if anyone does at all. Wielded properly, this will greatly increase the legibility of organisations from the centre and give high-agency employees masses of context with which to become that much more efficient. Last month we discussed Failure Demand, where providing poor customer service leads to more demand on an organisation, not less. One reader pointed out that, for the first time, we now have language processing tools which can proactively monitor customer interactions and understand why such failures arise. Executives will soon be able to see for themselves where the customer service function is failing, rather than relying on the word of the Head of Customer Service alone. Knowledge is power, and LLMs promise a powerful new source of it. Unstructured Data. Podcast: Collapsing the Talent Stack. May24 - Failure Demand.

AI outputs lack character. We are starting to see a (largely predictable) backlash against AI content. Given that GenAI is most commonly used in Marketing and Sales (see below), this is a trend savvy companies will note. After a decade of campaigns rejecting the idea that there is a single “norm” to aspire to, it’s no wonder that models trained to produce an average response feel a little lifeless by comparison. So we see Dove committing not to use AI to produce images of women, the rise of an LLM-Free quality mark, papers showing increased groupthink as AI tools are adopted, and Gen Z driving adoption of the word “mid” for anything that is not very special. Organisations have two possible responses: actively prompt to produce diversity (Dove have excellent resources on this), or keep GenAI tools focused on areas where creativity is the last thing you want. Nobody wants sentiment analysis with style, or an NDA that’s a bit quirky. Prioritise use cases accordingly. Dove Campaign. Dove Resources. LLM-Free Mark. Groupthink. “Mid”.

Adoption rises, risk mitigation fades. 50% of companies report having GenAI systems in at least two business functions, up from 30% last year, with the median project taking 1-4 months from inception to implementation. HR has seen the biggest cost reductions, and Supply Chain Management the biggest revenue gains. Despite this, Sales and Marketing is the most popular use case, likely because it requires relatively little modification to existing processes. Inaccuracy of responses is the only risk that companies are spending more to mitigate than 12 months ago. A small subset of companies report that over 10% of their EBIT is attributable to GenAI, and these organisations are setting the pace on Risk, Strategy, Talent & Operating Model best practices. McKinsey.

How To Successfully Integrate GenAI With Existing Organisations

Don’t build prototypes in isolation, or they will stay just that. Strikingly often we see technology teams lead on building the first LLM-powered system in an organisation, only for the project to stall. An excellent podcast this month reminded me of the language and fundamental tenets of Scientific Process Management, which lays out frameworks for thinking about the Processes and People in organisations. Too often these are neglected, so tech-led prototypes of the form “wouldn’t it be very cool if someone could do X” fail to account for the fact that real colleagues rarely do X. The key is to build these projects with cross-functional teams who understand the organisational context and can shape what gets built. However, this interlinking work is often abandoned. Disparate teams speak their own jargon and work differently, so achieving cooperation is a struggle and at first seems to produce nothing but heat. Consequently, as a recent HBR paper showed, the rare individuals who can communicate across silos often burn out, so it’s unsurprising that organisations often bring in consultants (including us!) to help with this work. Leaders, with or without external help, should aim to train their teams to work through these knotty problems. The interconnectedness will itself deliver a return, and the resulting projects will actually work in your organisation rather than simply in a flashy demo. Podcast: Breaking Boundaries. Podcast Writeup. Cross-silo burn out.

Set up dedicated processes for procuring and approving tools. If you don’t build, you buy. Doing so with new technology introduces many new challenges. Ben’s Bites (see Learning More below) published a phenomenal Case Study this month which walks through the structure of a functioning approvals process. Highlights include:

Centralise what needs centralising: Contract term approvals, client contract reviews, Data Protection and IP Protection. Push effectiveness decisions to the edge.

Maintain multi level approval lists for tools: Approved in all circumstances, approved for specific or one-time use, in pilot for a single project, awaiting approval.

Introduce fast lanes for re-approving software as new features are added.

Use well-resourced consultant teams to help with adoption and the safe & effective use of new tools.

Link. See Learning More below if you hit a paywall.

Lack of explicit training is driving a large gender gap in workplace adoption. The best large-scale adoption survey, covering 100,000 workers in 11 exposed occupations and linked to comprehensive government data, paints a picture of use in Professional Services. Unsurprisingly, younger and less experienced workers are more likely to adopt, as are high achievers. More surprisingly, there was a large adoption gap (20 percentage points) between men and women, even within the same role and controlling for other factors. This wasn’t explained by differences in awareness or in optimism about the likely impact; instead, female employees were more likely to report a lack of training as a barrier. Given the productivity gains already being realised, ensuring all employees are equally confident in, and able to access, GenAI tools will be a key pillar of workplace equality. Paper. Podcast.

Zooming Out

Tech giants are all in on mass-market adoption. Last week Apple made its move, announcing plans to bring LLMs into the iPhone operating system (at least on recent models). Its focus on Privacy and Security as a brand asset is part of the reason this was so much better received than Microsoft's move to embed LLMs in laptops just weeks earlier. Almost 1.5 billion people use an iPhone worldwide, and this is a third piece of the puzzle that will drive consumer adoption of AI tools. Office Copilot is putting LLMs in the most common software that people use. Apple Intelligence is putting LLMs in the most common hardware that people buy. OpenAI has made access to its frontier models free worldwide. Remember that, until last month, a staggeringly small proportion of people had used the best models available, because they required a paid subscription. The best comparator is the shift to using (and providing) mobile apps, which took several years from about 2010. This transition will be quicker, because millions of consumers can start using these capabilities with just a software update. If your AI strategy looks only at what you can do with AI, you’ve missed half the terrain: what can 1.5 billion potential customers or competitors with AI do to you? Apple Announcement. Apple Analysis. Microsoft Recall - Recalled.

The next two items are more technical than usual for this section, so feel free to skip them if that doesn’t interest you. The two hot topics in AI development right now are:

Agents! An excellent summary video explains how much progress has been made (lots) and how much remains to be done to make useful products (also lots). As agents arrive, remember that when something becomes more intuitive, it almost always means someone has made decisions for you. Apple are the masters of this: one reason their products are so easy to use is that there are so many defaults, and Google pays around $20bn a year just to remain the default search engine. Link.

Cybersecurity, both of models themselves and against model-powered attacks.

On the defensive side, a 160-page treatise from a former OpenAI safety engineer got huge attention, particularly for detailing the number of successful state-backed hacks of major tech companies and arguing that security at OpenAI is weak. More important than the content (which was widely known) is the coordination mechanism: now everybody working in the industry knows everyone else is talking about it too. A few days later, OpenAI appointed a former head of the US National Security Agency to its board. The Paper: Situational Awareness. Some successful attacks. OpenAI Responds 1. OpenAI Responds 2.

On the offensive side, a new paper claims a team of LLM-powered agents successfully and independently exploited 8 out of 15 zero-day vulnerabilities, that is, vulnerabilities that had not been reported before (and so could not have been included in the models’ training data). Pushback has pointed out that this is still quite hypothetical, but if your CISO isn’t examining the implications, they should be. Paper. Pushback.

Learning More

Training courses for actually doing things with ChatGPT from Ben’s Bites. Highlights include using AI for:

SEO

Drafting customer support emails

Building user personas

As well as courses, the Pro subscription includes weekly in-depth case studies with executives who share the highs and lows of transforming their business to make use of AI (see above). Link.

Note: Ben operates a paid referral scheme for sign-ups, but the link above is not a referral link; it’s just his regular web page. I committed to not recommending courses for cash and will stick by that. I recommend Ben’s newsletter and courses because I think they’re well worth it and I get a lot of value from my subscription! Happy to show you a demo before you sign up if that would be helpful, or put you in touch with Ben himself.

Half Year Round Up, from Ethan Mollick, the best AI commentator out there. Less in-depth than his usual pieces, but an excellent overview of where capabilities have reached, if you’re looking for something to share with colleagues who don’t follow AI closely. Link.

The Lighter Side

It’s cruel to laugh at a person when they’re down, but Google is not a person, so tuck in. A curated list of the best-worst answers from the experimental Google AI search results. Link.

Getting one over on unfair landlords is definitely a positive in my eyes (hence the section choice). But also read this through the lens of the Big Rule Change discussed in If You Only Read One Thing. Once you start looking, the examples are everywhere. Link.

Did anyone have “the last few years were the high point for productive online discourse” on their bingo cards? Maybe you should have. Link.

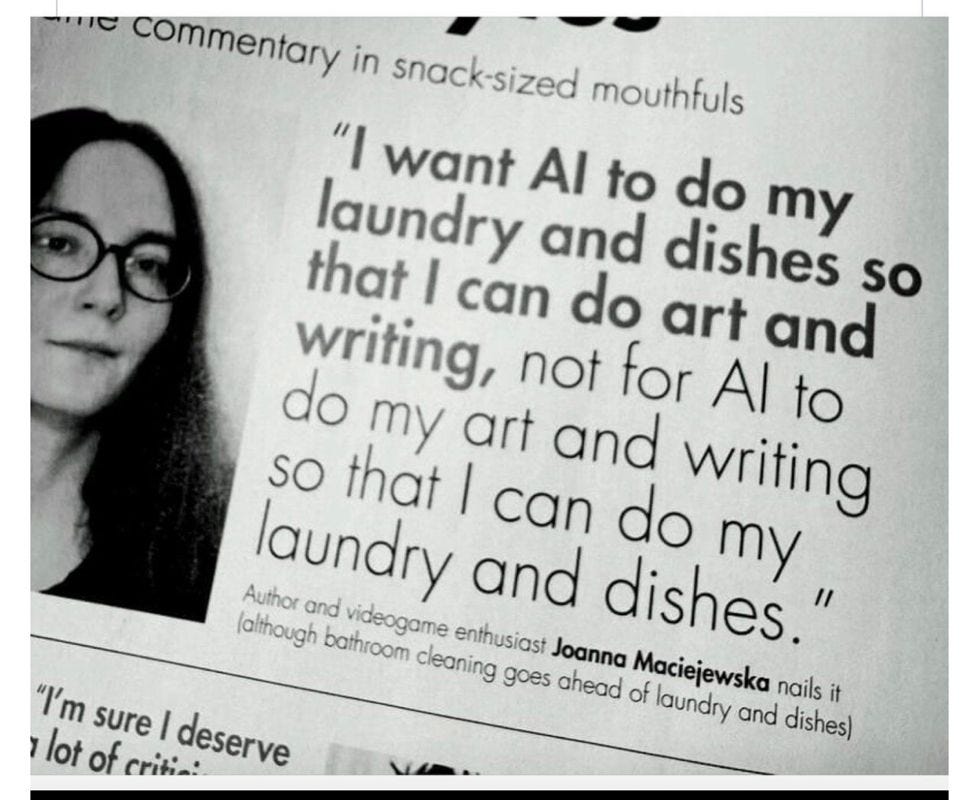

Hard to argue with this sentiment. Link.