We have said for some time that many organisations are getting the balance wrong between “doing AI” - by which they mean procuring or building something new - and “experiencing AI”. By the latter, we mean adapting to the changes that will be felt as customers, suppliers, competitors and employees get access to AI tools without you doing anything.

Of those actors who can influence our organisations, Microsoft are probably the biggest, and over the last month we have seen them make their moves. We rapidly stop talking about a feature as “AI” when it’s bundled up with regular software that we use all the time (think: image recognition on phones, recommendations on Spotify). This year many of us will be using AI without leaving the comfort of Microsoft Word. Now is the time to ask: what does this mean we should be doing differently?

James

P.S. A huge thanks to Lewis who (even more than usual) shaped, drafted, challenged and improved this newsletter! Let us know what you think.

If you only read one thing

As evidence of the positive productivity impacts of GenAI accumulates, so too do concerns about the quality of the work it produces. Especially on complex tasks, study after study finds that AI tools improve the work of weaker or newer employees, bumping up average quality in the process. In contrast, the effect on the best performers seems far weaker. The overall productivity gains result from aligning outputs with ‘best practice’, but this narrowing also reduces the frequency of very good results. Recent research indicates that AI-written code has a short lifespan, needing to be rewritten before long. And there is ongoing concern about ‘falling asleep at the wheel’, where people accept something that looks ‘good enough’ without taking the time to properly check for quality or inaccuracies – let alone actually put in the work to turn something that’s 80% good into something that’s 100% good.

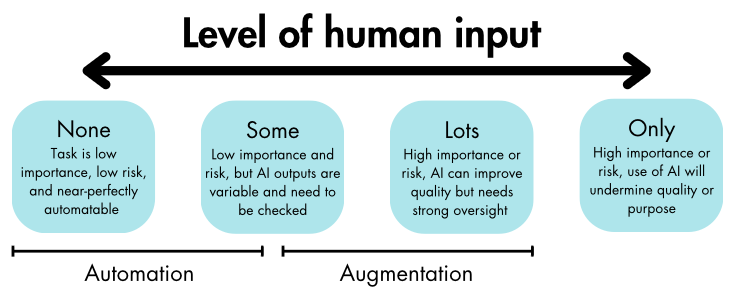

This is worth reflecting on as Microsoft Copilot rolls out (see below). The pull to use AI tools baked into office software to do our writing, presentations and emails will likely feel irresistible. As the best employees turn increasingly to AI tools for the sake of ease and efficiency, exceptionally good work risks becoming even more of an exception. For tasks such as writing up meeting notes, this may not be a problem. But for core products or offerings, or even internal HR policies, you want results that stand the test of time. The output isn’t even always the point: sometimes the value of a task is in the process, deepening the thinking of people working on it, or generating consensus across an organisation through discussion. Depending on the type of task and the intentions behind it, different degrees of automation will be appropriate. In some cases, no automation might even be the best approach. Managers unleashing these tools into their organisations need to think carefully about where tasks sit on this spectrum, and how to incentivise employees to act accordingly when automating has become so easy.

Declining Code Quality. Sources Ignored. Asleep At The Wheel. Risk of Secret Automation.

Fig 1. The spectrum of tasks requiring human input

Contents

What Is GenAI Good For?

Documents, Presentations, Emails and More

Advertising, Code Review, Nobel Prize Literature

Disrupting SEO

How To Successfully Integrate GenAI With Existing Organisations

Tasks - Not Jobs

Idea Generation

Good Work In Government: Finding Value In Unstructured Data

Education

Our Recent Work

Vision Pro Thoughts

Interview with Hays CEO on Future of Work

Zooming Out

Job Displacement - Some Modelling

Elections

Learning More

Useful Stats!

A (borrowed) Roadmap for Implementation

The Lighter Side

What Is GenAI Good For?

Drafting your documents, presentations and emails in-app. With the release of Microsoft Copilot Pro, you can now access GPT-4 directly in Word, Outlook, PowerPoint, Excel and more. And initial analysis indicates that it’s quite good (although the Excel integration needs work). Not all will pay for it straight away, but this potentially affects the work of hundreds of millions of M365 users. The Word functionality may not change much for those already making heavy use of GPT-4, but by placing the option right at people’s fingertips it will make using GenAI to write documents much more widespread (if not ubiquitous). Early reviews suggest that the PowerPoint functionality is especially impressive, creating entire slide decks with images, text, speaker notes and varied layouts in under a minute. $20/mo for this will seem a no-brainer for many. Others will oppose it, for now: out of self-interest, because they’re worried for their own jobs (for managers who have long treated making a presentation as a ‘special skill’, does this reveal them as emperors with no clothes?); or due to concerns about the loss of quality work and human touch (see ‘If you only read one thing’, above). But this ball is rapidly picking up speed as it rolls down the hill. Dodging it will be difficult. Review of Copilot Apps. Managers’ PowerPoint Panic. Copilot Super Bowl Ad.

The things we already know it’s good for, but done better. New research and releases across domains continue to come thick and fast. In programming, highly specialised models are now being deployed to both identify and fix bugs in code automatically. Google has rolled out a conversational bot to help its customers develop better ads, while Meta claims that its AI-boosted ads have increased campaign returns by 32%. LLMs have been found to match or exceed the accuracy of junior lawyers doing contract review, in a fraction of the time, and at a 99.97% reduction in cost. And Rie Kudan, winner of Japan’s most prestigious literary award, has openly acknowledged that around 5% of her latest sci-fi novel is composed of sentences generated by ChatGPT. Fixing Bugs. Google Ads, Meta Ads. Contract Review. 5% ChatGPT.

SEO isn’t ready for image-led search. Google has introduced a new ‘circle-to-search’ feature on Android devices (already heavily advertised on the London Underground). Users can circle anything that appears on their phone screen, in any app, and ask Google for more information about it: what it is, where it came from, why it’s important or popular. This has huge SEO implications. Text-based SEO, now 25 years old, risks becoming redundant in an increasingly visual online culture where you can go straight from image-on-screen to result. How do you make sure, if someone picks out a shot of your product, or something similar to it, that your result comes out on top? Amazon’s new AI shopping assistant, Rufus, raises similar questions: we’ve had years of people optimising Amazon product titles, often to the point of absurdity, but have the goalposts now moved? Circling. Rufus. Auto-Naming Screenshots.

How To Successfully Integrate GenAI With Existing Organisations

Think about tasks, not jobs, when deciding on AI use cases. If you’re considering building an AI application, don’t try to automate jobs in their entirety. Break them down into individual tasks, work out which of the common tasks are amenable to augmentation or automation, then assess how valuable doing this would be in each case. The more specific you are, ideally tying your assessment to the context of a particular organisation, the more successful you will be. This approach was developed in academia for helping understand AI’s impact on the economy, but is increasingly used to help enterprises assess their GenAI opportunities. Note, however, that it won’t help you come up with creative applications of GenAI that are distinct from tasks already carried out by a human. Which AI Applications Should You Build?
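To make the tasks-not-jobs approach concrete, here is a minimal Python sketch. The job, its tasks, the hours and the 0-to-1 ‘amenability’ and ‘value’ scores are all hypothetical placeholders, not drawn from any study; in practice they would come from your own organisation’s context. Ranking tasks by hours × amenability × value is an assumed way of combining the two assessments, not a standard method.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """One task within a job (all fields are illustrative assumptions)."""
    name: str
    hours_per_week: float  # time currently spent on the task
    amenability: float     # 0..1: how amenable the task is to augmentation or automation
    value: float           # 0..1: how valuable augmenting or automating it would be


def priority(task: Task) -> float:
    # Hypothetical scoring rule: time at stake, scaled by feasibility and value.
    return task.hours_per_week * task.amenability * task.value


def rank_tasks(tasks: list[Task]) -> list[tuple[str, float]]:
    """Return (task name, priority score) pairs, highest priority first."""
    scored = [(t.name, round(priority(t), 2)) for t in tasks]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# A hypothetical job broken down into tasks, rather than treated as a whole.
policy_analyst = [
    Task("Summarise consultation responses", hours_per_week=6, amenability=0.8, value=0.7),
    Task("Draft meeting notes", hours_per_week=3, amenability=0.9, value=0.3),
    Task("Negotiate with stakeholders", hours_per_week=8, amenability=0.1, value=0.9),
]

for name, score in rank_tasks(policy_analyst):
    print(f"{score:5.2f}  {name}")
```

Multiplying the scores means a task that scores near zero on either amenability or value sinks to the bottom, even if it takes up a lot of time; a weighted sum would be an equally defensible choice if you want the two to trade off against each other.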

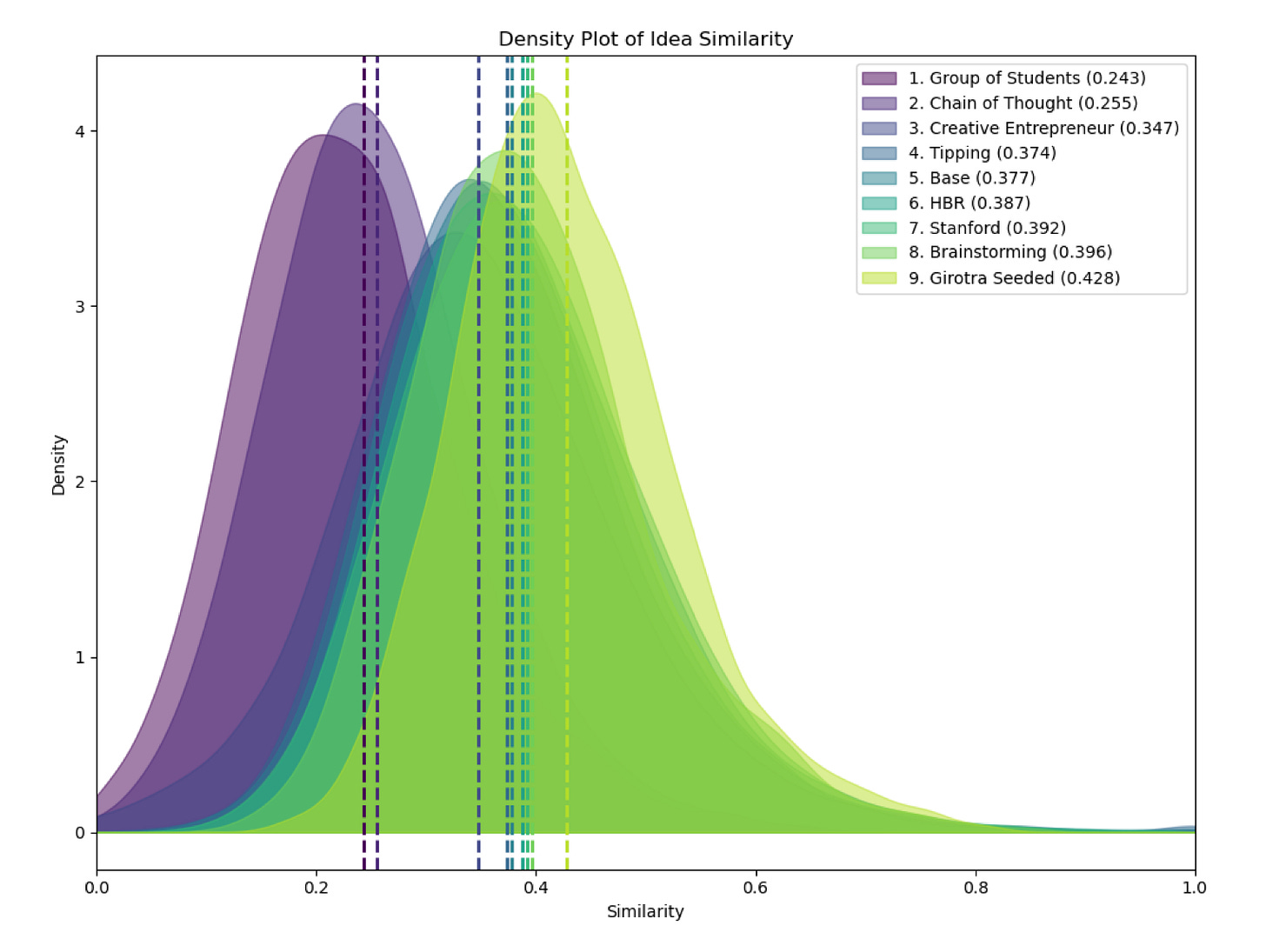

Making AI idea generation match human groups – almost. As with other tasks, the average quality of AI idea generation is high, but limited variance results in less novelty and a lower-quality overall ‘best’ idea, relative to the outputs of a human group. New research finds that chain-of-thought prompting – either setting out extra reasoning in the prompt, or encouraging the model to work through its answer step-by-step – can help address this, producing a diversity of ideas which comes close to that created by a group of students, while also generating the highest number of unique ideas of any approach considered. TL;DR: for work where it’s vital that you get the best possible idea, stick to human groups (for now). But if time and money are tight, a well-prompted LLM should get you close. Prompting Diverse Ideas.

Fig 2. Chart showing methods for decreasing similarity of LLM-made outputs
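One way to put a number on ‘diversity of ideas’ is the average similarity between every pair of ideas in a list: the lower the average, the more varied the list. Studies of this kind typically compare ideas using embedding-based cosine similarity; this minimal Python sketch swaps in a much cruder bag-of-words cosine similarity so that it runs with the standard library alone. The idea lists are invented for illustration.

```python
import math
from collections import Counter
from itertools import combinations


def bag_of_words(text: str) -> Counter:
    """Lower-cased word counts: a crude stand-in for a text embedding."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0 = no shared words)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def mean_pairwise_similarity(ideas: list[str]) -> float:
    """Average cosine similarity over all pairs of ideas: lower means more diverse."""
    if len(ideas) < 2:
        raise ValueError("need at least two ideas to compare")
    vectors = [bag_of_words(idea) for idea in ideas]
    pairs = list(combinations(vectors, 2))
    return sum(cosine(a, b) for a, b in pairs) / len(pairs)


# Hypothetical idea lists, invented for illustration.
repetitive = [
    "a reusable water bottle for students",
    "a reusable coffee cup for students",
    "a reusable lunch box for students",
]
varied = [
    "a reusable water bottle for students",
    "an app that swaps textbooks between classmates",
    "desk lamps powered by a pedal generator",
]

print(round(mean_pairwise_similarity(repetitive), 2))
print(round(mean_pairwise_similarity(varied), 2))
```

Scoring the ideas produced by different prompting strategies (say, a plain prompt versus a chain-of-thought prompt) with the same metric gives the kind of comparison the figure above summarises, though a real evaluation would use proper embeddings rather than word overlap.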

Finding value in unstructured text data to potentially save lives. Led by Ed Flahavan, BIT have been using LLMs to review large amounts of unstructured text data at speed. This particular project focused on publicly available Prevention of Future Death (PFD) reports, automatically generating and analysing robust coded data and pulling out key insights in a fraction of the time that it would have taken human analysts. But there are huge potential applications for reviewing complaints or complex case files in other contexts (e.g. customer interactions, the police, social care). More of this please. Unlocking Unstructured Text.

Growing enthusiasm around GenAI in education. Negative media attention has hampered uptake, but a new UK government document points to the scope for GenAI to help great teachers teach even better, and reflects growing recognition of the need to adapt. Meanwhile in the US, OpenAI has partnered with a university for the first time: Arizona State University will have full access to ChatGPT Enterprise and plans to use it for coursework, tutoring and research, as well as building a personalised AI tutor and allowing students to create AI avatars for study help. GenAI In Education. OpenAI - Arizona State.

Our Recent Work

We collected some thoughts on the new Apple Vision Pro, outlining a variety of ways in which it might complement developments in GenAI (it’s surprisingly rare to see the two technologies discussed together). Vision Pro.

James spoke to the CEO of Hays about how we see the future of work developing. AI Uptake This Year. Impact On Top Jobs.

Zooming Out

Job displacement to be substantial but gradual, allowing time to mitigate impacts. New research suggests it is not cost-effective for most businesses to automate computer vision tasks that have ‘AI exposure’, with only 23% of relevant worker wages in the US deemed attractive to automate. The cost of implementing AI, after all, is not just in tokens, but in setting up pipelines and infrastructure. For some tasks, this is not yet worthwhile. Job displacement may be more gradual than some expect as a result, creating room for policy and retraining to mitigate unemployment impacts. Beyond AI Exposure.
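The logic behind ‘exposed but not worth automating’ can be sketched as a simple break-even rule: a task is attractive to automate only if the annualised cost of building and running the AI system is lower than the wages currently spent on it. The research uses a more detailed cost model; this minimal Python sketch, with invented task names and figures, only illustrates why fixed setup costs rule out many small-scale tasks. It does not reproduce the 23% figure.

```python
from dataclasses import dataclass


@dataclass
class ExposedTask:
    """A task with AI exposure. All figures are hypothetical, in dollars per year."""
    name: str
    wage_bill: float    # total worker wages currently spent on this task
    system_cost: float  # annualised cost of building and running the AI system


def attractive_to_automate(task: ExposedTask) -> bool:
    # Simplified break-even rule: automate only if the system is cheaper than the labour.
    return task.system_cost < task.wage_bill


def share_of_wages_attractive(tasks: list[ExposedTask]) -> float:
    """Fraction of exposed wages sitting in tasks where automation is cost-effective."""
    total = sum(t.wage_bill for t in tasks)
    attractive = sum(t.wage_bill for t in tasks if attractive_to_automate(t))
    return attractive / total if total else 0.0


# Hypothetical tasks: similar technology, very different scale.
exposed = [
    ExposedTask("Inspect parts on a large production line", wage_bill=400_000, system_cost=150_000),
    ExposedTask("Check produce quality in a small bakery", wage_bill=30_000, system_cost=120_000),
    ExposedTask("Review shelf stock in a single shop", wage_bill=20_000, system_cost=90_000),
]

for task in exposed:
    print(f"{task.name}: automate = {attractive_to_automate(task)}")
print(f"{share_of_wages_attractive(exposed):.0%} of exposed wages are attractive to automate")
```

Because the system cost is largely fixed while the wage bill scales with how much of the task a business does, the same technology can be worth deploying in a large firm and not in a small one, which is one reason displacement may be gradual.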

Around the world in AI elections. At least 64 countries head to the polls in 2024, with AI playing an increasing role. AI-generated imagery has been prominent in the run-up to the Indonesian election this week, for instance, with the leading candidate rebranding as ‘cuddly’ with the help of a Midjourney-created portrait in his campaign materials. According to people in Jakarta, in political circles the main effects of AI have been in Cambridge Analytica-style voter segmentation and targeting online, with little adversarial GenAI or deepfake content – likely reflecting Indonesia’s exceptionally polite political culture, rather than technical capabilities. But the run-up to the Indian elections in April and May may prove a different story. GenAI In Indonesian Elections. AI-aided CCP Propaganda.

Fig 3. Midjourney-generated cartoons of presidential candidates. Source.

Learning More

Microsoft New Future of Work Report 2023: a summary of research on AI in the workplace. Link.

The world’s 150 most used AI tools. Link.

A useful, if curiously-formatted, roadmap to GenAI activities c/o Immr. Link.

The Lighter Side

OpenAI use policy now comes in all shapes and sizes. Link.

AI papers shared by social media influencers get read 2-3x more. Not sure if that is the Lighter Side or a damning statement about the internet. Link.

GolfGPT. Link.

ASML don’t need to advertise but wanted an excuse to play with the tools. Link.

A new AI-choreographed Wayne McGregor ballet. Presumably made by OpenAI’s DBALL·E Model. Link.