Season's Greetings from San Francisco, which I am visiting as part of a few weeks' trip across the Atlantic.

People here are fond of saying “The future is here, it’s just not evenly distributed”, a claim which betrays the confidence of a city that feels it is leading the world, mastering change rather than being buffeted by it. It's hard to dismiss. As a recent veteran of three driverless taxi rides, I was most struck by how normal the experience felt. It is a reminder, perhaps, that as individuals we have grown very used to absorbing the impact of new technology on our personal lives: another entry in a long list of technologies that go from magical to barely worthy of note in a short space of time.

It’s the time of year for retrospectives and forecasts. I haven’t added to the dozens in circulation (several are linked below), but a number of conversations this past week have centred on “where are we?”. I’ll leave a detailed (and tech-focused) answer for face-to-face conversations in the New Year.

I do, however, want to allow myself a quick inward-looking retro at the end of my first full year running Paradigm Junction. In the past 12 months I’ve learnt more than I could ever have imagined from the clients, researchers, contacts and friends I have had the chance to work with. I’m in the process of hiring, which will bring excitement and change along a wholly different dimension. I’ve grown more certain that technology is changing how we all work, and that helping others to navigate this change, profitably but with care for the people affected, is a worthwhile undertaking. I’ve loved almost every minute. Thanks for your role in all of this, from feedback and questions to shares and introductions. It has made this possible, and meant a lot.

Wishing you an energising period of rest over the next few weeks, whatever you are up to.

James

If You Only Read One Thing

The Three Wise Men, not the Three Know-it-alls? Passing an exam is about proving what you know; intelligence is about knowing what to do with it. Knowledge is knowing that a tomato is a fruit; wisdom is knowing not to put one in a fruit salad. Exams ask questions with neat answers; the world doesn’t. Street smarts will save your life; book smarts help you write about it.

Maxims like these are worth keeping in mind as we adjust to a world with a dramatic increase in available intelligence, or, more precisely, in an occasional substitute for it.

One of the most frustrating aspects of using AI tools is how often they produce answers that feel like they don’t quite get it. Even more frustrating is reading AI commentators who mistake passing tests or professional exams for proof that the models do get it. We evaluate models on tasks that are easy to evaluate: passing legal exams rather than practising law. This leaves a gap, which needs filling with a new skill: knowing how to wield these tools.

Similarly, I regularly read claims that AI tools will let us close institutional knowledge gaps, making our organisations more efficient in the process. Implicitly, these claims assume that poor knowledge is what holds us back from improving. (An aside: this is a very Californian perspective; the emphasis on self-knowledge as a path to self-improvement feels quite jarring to a British outsider.) But, to quote a favourite Silicon Valley maxim: ideas are cheap, execution counts. Where AI tools give us a surfeit of ideas for improvement, it's making the change stick that will count, not having the idea. Being discerning about which ideas are worth implementing will be a necessary skill, and one that comes from outside the models, not from within them.

It may be possible, in time, to train models to display this wisdom. An excellent paper last month described the role of metacognition (such as intellectual humility, flexibility and appropriate deference to expertise) in solving complex real-world problems. Whilst I am personally sceptical that a sufficient dataset exists to train models in these behaviours, this isn’t a newsletter about training AI models; it's about using them.

As the new year gives many people a moment to pause, reflect and plan, the question I’d like to lead with next year is this: where in our work is intelligence what matters, and where, under the attention of a wise eye, is it really something else?

Evaluating what is easy. AI as Manager, Coach or Panopticon. The wisdom to know when to use AI. Paper: Metacognition.

Contents

If You Only Read One Thing

Knowledge, Intelligence and Wisdom

What Is GenAI Good For?

Skills Audits and AI Engineers

New Data, Old Stories

How To Successfully Integrate GenAI With Existing Organisations

The Importance of Plumbing

Evaluating ROI

The Value of Saving An Hour

Zooming Out

Incumbents Get Younger

Startups Get Squashed

Global Conversations

End of Year Trends

Learning More

Agents and Automation

Presentations & Guides

The Lighter Side

What Is GenAI Good For?

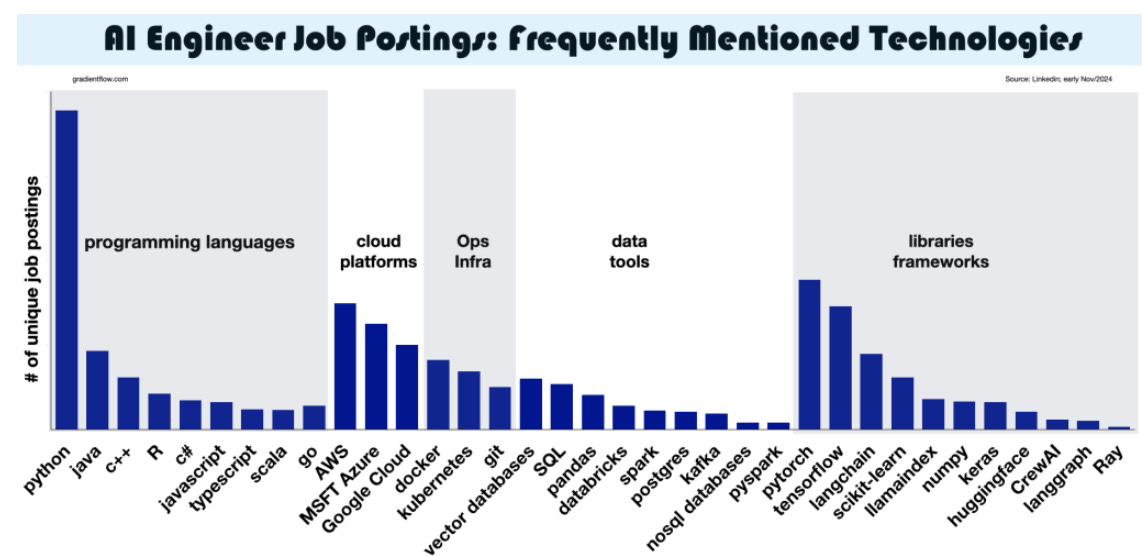

Learning at scale. A fun and innovative approach to answering the question: what skills are needed to be an AI Engineer? Ben Lorica takes an approach I’ve now seen applied usefully in a few organisations: using an LLM to read all available job descriptions and find common themes. If you’re contemplating a job switch in the new year, work like this might tell you what to aim for (or what to emphasise in job applications). Why read one example when you can read them all? Article.
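For the curious, here is a minimal sketch of the "read them all" pattern: extract skills from each description, then count how many descriptions mention each one. It is an illustration under stated assumptions, not Ben Lorica's method. `extract_skills` is a hypothetical stand-in for an LLM call, using a fixed keyword list so the example runs offline, and the skill names and job adverts are invented.

```python
from collections import Counter

# Hypothetical skill vocabulary. In a real pipeline an LLM would read each
# description and return the skills it asks for; this list is a stand-in.
KNOWN_SKILLS = ["python", "evaluation", "prompt design", "vector databases", "product sense"]


def extract_skills(job_description: str) -> set[str]:
    """Stand-in for an LLM extraction step: simple case-insensitive keyword matching."""
    text = job_description.lower()
    return {skill for skill in KNOWN_SKILLS if skill in text}


def common_themes(job_descriptions: list[str], top_n: int = 3) -> list[tuple[str, int]]:
    """Count how many descriptions mention each skill (once per description)."""
    counts: Counter[str] = Counter()
    for jd in job_descriptions:
        counts.update(extract_skills(jd))
    return counts.most_common(top_n)


# Invented example adverts.
jobs = [
    "AI Engineer: Python, evaluation of LLM outputs, prompt design.",
    "We need Python and vector databases experience, plus product sense.",
    "Own evaluation pipelines in Python; prompt design a plus.",
]
print(common_themes(jobs))
```

Counting each skill once per description, rather than once per mention, stops one verbose advert from dominating the tally.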

New Data, Same Old Stories

A study of 187k developers shows AI transforms how we work. More time spent on output, less on management. More experimentation. Higher impact for lower skilled workers. These combine to give higher headline productivity stats. Paper.

Massive volume increases for any process that is exposed to the internet. A quarter of campus recruitment teams saw applications jump by more than 10%. Amazon reports cyber attacks growing from 100m to 750m per day in just 7 months. Recruitment. Cyber.

How To Successfully Integrate GenAI With Existing Organisations

Forward-looking companies (and Harvard Business Review) are asking: how will this work differently? If the GenAI wave is meaningful, then commerce in 5 years won’t look like today's with a sprinkling of AI assistants; routine interactions will have fundamentally changed. Examples of previous shifts are now so commonplace as to be almost unremarkable, but consider how differently we searched for and paid for services a decade ago. The Yellow Pages feel very quaint. Designs for how internet-based commerce will work a decade from now are starting to be laid down, and if you depend on the internet for custom (you do), you should take note. How will people interact with applications differently? What does it mean to make a website legible to an AI agent? How are these agents influenced, and what comes after SEO? How do agents pay for things in a technically secure way? On what legal basis? Unless you are building a tech firm, the answers probably aren’t yours to write, but you don’t want to make big investment decisions that end up on the wrong side of the wave and leave you stuck with technical debt for years to come. HBR: Strategy in an age of abundant expertise. Roles needed for GenAI Applications. Agent responsive design. Writing for an LLM to read. Payments by agents.

ROI Evaluation: AI projects must pay their way, but there are intangible benefits too. An excellent framework from the consistently insightful Tobias Zwingmann helps avoid AI projects overpromising and underdelivering. Financial gains can be multiplied to recognise diffuse but positive impacts (like better customer experience) or dampened to account for risk (how likely are we to pull this off?). But these adjustments only multiply the expected recurring profit. If that isn’t above zero to begin with, don’t spend the money just for the feel-good factor. The full framework is worth a read, particularly given growing project costs (to combat hallucinations, secure systems, etc.) even as model costs fall. The AI Profit Stack. Growing project costs.
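A minimal sketch of that arithmetic as I read it, assuming both adjustments are simple multipliers on expected recurring profit. The function and parameter names are my own, not Tobias Zwingmann's, and his full framework has more layers than this.

```python
def adjusted_value(
    expected_recurring_profit: float,
    intangible_multiplier: float = 1.0,
    success_probability: float = 1.0,
) -> float:
    """Scale expected recurring profit up for diffuse benefits and down for delivery risk.

    Assumption: both adjustments are plain multipliers on the base profit.
    """
    if intangible_multiplier < 0:
        raise ValueError("intangible_multiplier must be non-negative")
    if not 0.0 <= success_probability <= 1.0:
        raise ValueError("success_probability must be between 0 and 1")
    return expected_recurring_profit * intangible_multiplier * success_probability


def worth_pursuing(expected_recurring_profit: float, **adjustments: float) -> bool:
    """Gate on the base profit first: multipliers cannot rescue a project with none."""
    if expected_recurring_profit <= 0:
        return False
    return adjusted_value(expected_recurring_profit, **adjustments) > 0


# Hypothetical project: 100k a year in profit, a 20% uplift for customer
# experience, and a coin-flip chance of delivering it.
print(adjusted_value(100_000, intangible_multiplier=1.2, success_probability=0.5))  # 60000.0
print(worth_pursuing(0, intangible_multiplier=3.0))  # False
```

A negative base profit multiplied by a generous intangible multiplier only becomes more negative, which is the point: the feel-good factor scales what is already there.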

Related: The Value of Saving An Hour. To repeat one of my favourite analogies: autopilot flies the plane for 99% of the journey, but you still need to pay for a pilot (or even two) to be there the whole time. Most employees don’t work at peak capacity all the time; they are paid for a week’s work so that they are on call when needed, or can work flat out during intermittent busy periods. In this sense, employment looks more like a retainer. But if a member of my team takes 20h a week to do their work, and isn’t doing useful work for the other 20h, then tools which speed up those 20h plausibly offer no cost savings at all. How do you factor this into value calculations? Who in your organisation does this (over)simplified model of work describe? And can you have that conversation openly? Pricing the Water Cooler Chat. Twitter thread on WFH and time saving.
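A worked version of that (over)simplified model, as a sketch. The hourly cost, the assumption that the tool halves the 20h of real work, and the `share_redeployed` parameter (the fraction of freed hours that become other useful work or reduced paid hours) are all hypothetical.

```python
def naive_saving(hours_saved_per_week: float, hourly_cost: float) -> float:
    """What a simple business case often claims: every hour saved is cash saved."""
    return hours_saved_per_week * hourly_cost


def realised_saving(hours_saved_per_week: float, hourly_cost: float, share_redeployed: float) -> float:
    """Only hours redeployed to useful work (or removed from the payroll) count."""
    if not 0.0 <= share_redeployed <= 1.0:
        raise ValueError("share_redeployed must be between 0 and 1")
    return hours_saved_per_week * hourly_cost * share_redeployed


# Hypothetical: 20h of real work a week, and a tool that halves it to 10h.
hours_saved = 10
hourly_cost = 50  # hypothetical fully loaded cost per hour

print(naive_saving(hours_saved, hourly_cost))          # 500
print(realised_saving(hours_saved, hourly_cost, 0.0))  # 0.0: freed time just joins the slack
print(realised_saving(hours_saved, hourly_cost, 0.5))  # 250.0: half the freed time is put to use
```

The gap between the first and second figures is the conversation the paragraph above asks whether you can have openly.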

Zooming Out

Large companies are getting younger, breaking a decades-long trend. This reflects a combination of digital technologies allowing startups to scale and long-standing giants of industry failing to keep up. Intel left the Dow Jones this month in favour of NVIDIA. Is your board familiar with these stories? Analysis. Discussion.

Startups are getting squashed as LLMs do more and more themselves. A catch-22: what should I build, given the risk that OpenAI and Google might release it as a feature 6 months down the line? Discussion.

NYT DealBook Summit. It has as good a claim as any to be leading the global conversation for next year. Videos.

End of Year Trends. It feels like there is a flood of roundup pieces, far too many to read. So, of course, people are asking GenAI to summarise them. Whether the summary is insightful depends on how closely you’ve followed this year. As ever, it's not a trivial question to ask whether automation removes the value here. Are these reports useful to write, read, share or something else? Summary of reports I read. Capgemini. Wharton. Slalom. 155 more (via Ben Evans).

Learning More

Agents and Automation. How do you get from a chatbot that knows everything to a piece of software that can carry out useful tasks? This explainer (aimed at HR professionals) and video tutorial (for building a tool that produces pre-meeting briefings based on a person’s online presence) are good places to start getting a hands-on feel for this. Mildly technical, but very well explained. Explainer. Tutorial.
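To make the chatbot-to-software step concrete, here is a minimal sketch of the loop most agent designs share: the model proposes an action, ordinary code executes the tool, and the result is fed back until the model produces a final answer. Everything here is hypothetical: `stub_model` stands in for a real LLM call with scripted behaviour, and `lookup_profile` stands in for whatever search or data source a pre-meeting briefing tool would actually use.

```python
from typing import Callable


def lookup_profile(name: str) -> str:
    """Hypothetical tool. A real agent might call a search API here."""
    return f"{name}: Head of Operations, posts mostly about logistics."


TOOLS: dict[str, Callable[[str], str]] = {"lookup_profile": lookup_profile}


def stub_model(history: list[str]) -> tuple[str, str]:
    """Stand-in for an LLM call, returning (action, argument).

    Scripted to request a tool on the first turn, then write the
    briefing once a tool result appears in the history.
    """
    tool_results = [h for h in history if h.startswith("TOOL:")]
    if not tool_results:
        return ("lookup_profile", "Alex Smith")
    return ("final", "Briefing: " + tool_results[-1].removeprefix("TOOL: "))


def run_agent(task: str, max_steps: int = 5) -> str:
    """Alternate between asking the model what to do and running the chosen tool."""
    history = [f"TASK: {task}"]
    for _ in range(max_steps):
        action, arg = stub_model(history)
        if action == "final":
            return arg
        result = TOOLS[action](arg)  # ordinary code, not the model, executes the tool
        history.append(f"TOOL: {result}")
    return "Stopped: step limit reached"


print(run_agent("Prepare a pre-meeting briefing"))
```

The `max_steps` cap matters in practice: a real model can loop, and a hard limit is the simplest guard.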

Ben Evans’ annual presentation. He is always the first person I recommend when people ask which newsletters they should subscribe to. Presentation.

Tips for the final 10%. How do you coax AI tools into producing work close to a standard people will pay for, rather than work that is good for an amateur but professionally uninspiring? Guide.